Debaters Behaving Badly, Part 4 - Inaccurately Comparing Datasets

In previous posts in this series, I've tried to summarize what I consider bad behavior among those debating climate change. So far I've discussed what I consider statistically unethical practices: using short-term trends, misusing scales, and using local instead of global data. Here I'd like to look at how we make comparisons between types of data.

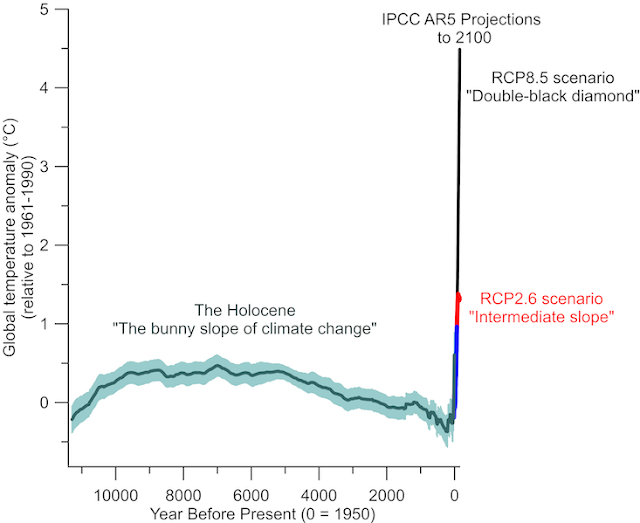

In climate science, we need to deal with all sorts of different kinds of data. For global temperatures, I can think of at least 3: proxies, the instrumental record (satellites, thermometers), and models (reanalysis, modeled projections, etc). It's perfectly fine to create graphs that include all of these kinds of data, but in doing so, we have to make sure that we are communicating honestly and making comparisons accurately. Proxy data has lower resolution and larger confidence intervals than the instrumental record. Modeled projections always depend in part on the assumed scenario, and their confidence intervals grow with time. My interest in climate change is mostly rooted in my love of geology, so I love looking at proxy evidence and how current temperatures compare to the past. But I'm not a fan of seeing graphs like this.

Marcott 2013 with the Instrumental Record and Model Projections

It's not so much the humor that bothers me (calling the preindustrial Holocene a "bunny slope" is funny). But there are several things here that I do take issue with:

- The GMST y-axis is set to a 1961-1990 baseline, but it's generally accepted practice to use an 1850-1900 baseline for modeled projections, so that the values we report are consistently compared to this proxy for preindustrial temperatures. Not a big deal; just a pet peeve.

- The data for Marcott 2013 ends in 1940 (20-year centered mean), so it's unclear to me how they set that to the 1961-1990 mean. It can certainly be done, but to do so you'd have to first find a common baseline between Marcott 2013 and the instrumental record and then shift both by an equal amount.

- The name of the instrumental dataset is not given nor whether smoothing is applied.

- The confidence envelope for Marcott 2013 is given, but not the confidence envelope for the modelled projections.

The last one is the most egregious to me. If you want to help me understand how much warming above the warmest temperatures of the preindustrial Holocene we can expect by 2100, I need to know the CI for the model. Without it, it looks like you're trying to make the graph look as scary as possible. Here's another example.

This graph gives the impression that Marcott 2013 contains recent temperatures. It's labeled as if that spike in temperatures is part of Marcott's proxy reconstruction. It's not. His graph ends in 1940, and even the 5°x5° reconstruction does not show this much warming. In my view, best practice with his study is to use the RegEM reconstruction, which uses infilling and matches the instrumental record more closely. I have a summary of the issues involved with Marcott 2013 here and here, including how robust the last 50 years are and whether possible large spikes in global temperatures would be smoothed out by Marcott's statistical methods. But the author of this graph then plotted 2014 and 2015 from HadCRUT4. However, Marcott 2013 has a resolution of 20 years, so plotting individual years on this graph can give false impressions about how much warmer we are now than the HTM (in this graph, about 6.5K years ago). We can do better than this.

Marcott 2013: A Better Approach

I think if we're going to make these kinds of graphs, we need to follow some pretty basic and standard guidelines to ensure that we are presenting the data as honestly as possible. Here are some guidelines I follow:

- Plot confidence intervals if available, especially for proxy and modeled data.

- Label the types of datasets used. It should be clear what is proxy, what is instrumental, and what is modeled.

- Match smoothing across datasets. Marcott's data has a resolution of 20 years, so plots of the instrumental data need to match that resolution.

- Set the scales of the x and y axes to allow for the most clarity of presentation, and be clear about the baseline used.
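The smoothing and baseline guidelines above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the workflow behind the graphs in this post: the function names `rebaseline` and `centered_mean` are hypothetical, and the input is a synthetic linear trend standing in for a real annual series such as HadCRUT5.

```python
from statistics import mean


def rebaseline(years, temps, start=1850, end=1900):
    """Shift anomalies so that their mean over [start, end] is zero."""
    ref = [t for y, t in zip(years, temps) if start <= y <= end]
    if not ref:
        raise ValueError("series has no data inside the baseline period")
    offset = mean(ref)
    return [t - offset for t in temps]


def centered_mean(temps, window=20):
    """Centered moving average over `window` values.

    Returns None wherever the full window does not fit. For an even
    window the average is centered half a step before index i.
    """
    half = window // 2
    out = []
    for i in range(len(temps)):
        lo, hi = i - half, i + half  # slice [lo:hi) holds `window` values
        if lo < 0 or hi > len(temps):
            out.append(None)
        else:
            out.append(mean(temps[lo:hi]))
    return out


# Synthetic placeholder data (NOT real HadCRUT5 values): 0.01 C/year trend.
years = list(range(1850, 2021))
annual = [0.01 * (y - 1850) for y in years]

anoms = rebaseline(years, annual)           # now relative to 1850-1900
smoothed = centered_mean(anoms, window=20)  # matches a 20-year proxy resolution
```

Leaving the ends of the smoothed series empty, rather than padding them, avoids implying that the most recent decade has the same support as the rest of the record.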

Here is an example of graphs I made using Marcott 2013 and HadCRUT5 data. Notice here that the proxy data has the confidence envelope shown as dotted lines and HadCRUT5 is shown in black, so it's clearly distinguishable from the proxy data. I labeled the graph with the smoothing and baseline clearly indicated.

Before publishing this on my blog, I also checked to see if what I was plotting made sense. Here is the overlap between Marcott 2013 proxy and the HadCRUT5 data.

The above graph shows HadCRUT5 both with 20-year smoothing and without. The overlap with Marcott 2013 is the dots (my spreadsheet didn't automatically connect them). As you can see, though, even the annual data fits in the confidence envelope for Marcott 2013 using the RegEM reconstruction. Even though the overlap doesn't cover many years, it does give me confidence that I've matched both to the 1850-1900 baseline accurately and that the two agree with each other where they overlap. So using my first graph above with Marcott 2013 and a 20-year smoothed HadCRUT5, we can see that current temperatures are ~1 C above the 1850-1900 mean and about 0.3 C warmer than the upper confidence envelope for the HTM. So I feel confident in saying that current temperatures are "likely 0.3 C warmer than at any point in the preindustrial Holocene." Warming is continuing at 0.2 C/decade, so we are leaving the temperatures of the preindustrial Holocene in the dust at a rapid pace.

Pages 2K: A Better Approach

We can do the same thing with the Pages 2K dataset and HadCRUT5. I plotted both to the 1850-1900 mean. Since Pages 2K has annual resolution, I plotted HadCRUT5's annual data. Using the confidence envelope for Pages 2K, I get the following results.

Here I zoomed in on the overlap between the two datasets. You can see remarkable agreement between them, but HadCRUT5 definitely shows more variability than Pages 2K during the years they overlap. However, none of the HadCRUT5 temperatures fall outside the confidence envelope for Pages 2K.
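The overlap check described here, whether any instrumental value falls outside the proxy confidence envelope, can also be done numerically rather than by eye. The sketch below is an assumption about how one might do it: `outside_envelope` is a hypothetical helper, and the numbers are made up (with one deliberate outlier) purely to show the check working. They are not Pages 2K or HadCRUT5 values.

```python
def outside_envelope(years, values, envelope):
    """Return the years whose value falls outside the proxy envelope.

    `envelope` maps year -> (lower, upper). Years that the proxy does
    not cover are skipped, since there is nothing to compare against.
    """
    misses = []
    for year, value in zip(years, values):
        if year not in envelope:
            continue
        lower, upper = envelope[year]
        if not (lower <= value <= upper):
            misses.append(year)
    return misses


# Made-up illustration values, not real data.
envelope = {1850: (-0.30, 0.30), 1851: (-0.30, 0.30), 1852: (-0.25, 0.35)}
inst_years = [1850, 1851, 1852, 1853]
inst_values = [0.10, -0.20, 0.40, 0.00]  # 1852 is a deliberate outlier

misses = outside_envelope(inst_years, inst_values, envelope)
print(misses)
```

An empty list is the numerical version of "none of the instrumental temperatures fall outside the envelope."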

From the Pages 2K comparison, we can see that current temperatures are about 1.2 C warmer than the 1850-1900 mean. The warmest preindustrial temperature in Pages 2K (the top of the confidence envelope for the warmest years) is about 0.8 C. Because of this, I can feel confident that I'm being accurate to the data by saying that currently GMST is likely 0.4 C warmer than at any point in the last 2000 years, with temperatures currently increasing at 0.2 C/decade.
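The arithmetic behind that statement is simply the current anomaly minus the highest point of the proxy's upper confidence bound. Here is a small sketch using the two rounded figures quoted above (1.2 C and 0.8 C); the other upper-bound values in the list and the function name `margin_over_warmest` are hypothetical placeholders.

```python
def margin_over_warmest(proxy_upper_bounds, current_anomaly):
    """Current anomaly minus the highest upper bound of the proxy envelope."""
    return current_anomaly - max(proxy_upper_bounds)


# 0.8 is the rounded peak of the upper envelope quoted in the text;
# the other entries are placeholders so that max() has something to scan.
upper_bounds = [0.5, 0.8, 0.6]
current = 1.2  # rounded current anomaly vs. 1850-1900, from the text

margin = margin_over_warmest(upper_bounds, current)
print(f"{margin:.1f} C above the warmest upper bound")
```

Comparing against the top of the envelope rather than the central estimate is the conservative choice: it gives the smallest margin the proxy uncertainty allows.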

Conclusion

As a general rule of thumb, I think it's advantageous to make claims that can't rationally be accused of "alarmism." I'm not going to post model projections without confidence intervals. I'd much rather stick to the empirical data and state accurately what can likely be concluded from that data. I'll still be called an alarmist, but those who do so will have no rational basis for it.
