Patrick Frank Publishes on Errors Again

Recently I came across yet another paper by Patrick Frank[1] attempting to claim that climate scientists have been underestimating uncertainties in climate-related data. In this paper, he takes aim at GMST data, and he argues that

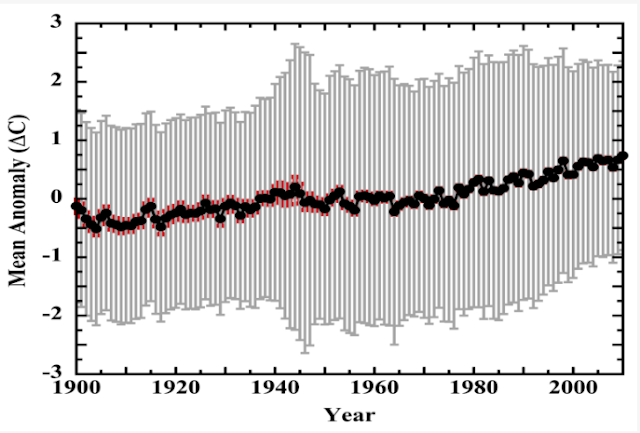

LiG resolution limits, non-linearity, and sensor field calibrations yield GSATA mean ±2σ RMS uncertainties of, 1900–1945, ±1.7 °C; 1946–1980, ±2.1 °C; 1981–2004, ±2.0 °C; and 2005–2010, ±1.6 °C. Finally, the 20th century (1900–1999) GSATA, 0.74 ± 1.94 °C, does not convey any information about rate or magnitude of temperature change.

The resulting GMST graph from his calculations is below.

Essentially, he's saying that errors associated with liquid-in-glass thermometers are so large that we can have no confidence in the global warming trend in the major GMST datasets. Of course, the organizations producing these GMST datasets all evaluate the uncertainties associated with their anomaly values, and their estimates are invariably much smaller: about ±0.05°C in recent decades, gradually increasing to ±0.15°C in the late 19th century.[2] These estimates are also assessed and published in the peer-reviewed literature. Are all these estimates wrong? Has Patrick Frank discovered something that none of those working with the data have found before? Let's see.

Defining Terms

First, a brief overview of the statistics involved, in very general terms. Frank is attempting to calculate the root-mean-square (RMS) uncertainty at 95% confidence for GMST anomalies. I talk more about what this means in another post, but essentially the idea is that, given the calculated mean value, we can be 95% confident that the true value falls within the calculated range. So if Frank is right that during the 20th century the globe warmed 0.74 ± 1.94°C, then we can have very little confidence that the globe has warmed at all, since the ±1.94°C range is larger than the calculated mean of 0.74°C. The 95% range would run from -1.20°C to +2.68°C, so the true value could even be negative.

Statisticians assess this by calculating a standard deviation (σ). Assuming a normal distribution, a range of 1σ on either side of the mean contains 68.3% of the values in the distribution, while a 2σ range contains 95.4%. To calculate the 95% uncertainty, therefore, you multiply your 1σ value by 1.96; the 95% range is slightly narrower than 2σ. This is often summarized as the 68-95-99.7 rule, the approximate percentages for 1σ, 2σ, and 3σ. In many sciences, the 95% (~2σ) confidence level is what is required to assess statistical significance.
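These percentages can be checked directly: for a normal distribution, the fraction of values within ±kσ of the mean is erf(k/√2). A minimal Python sketch using only the standard library (the helper name `coverage` is just for illustration):

```python
import math

def coverage(k: float) -> float:
    """Fraction of a normal distribution lying within ±k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))

for k in (1, 1.96, 2, 3):
    print(f"±{k}σ covers {coverage(k):.2%}")

# Printed output:
# ±1σ covers 68.27%
# ±1.96σ covers 95.00%
# ±2σ covers 95.45%
# ±3σ covers 99.73%
```

This is why 1.96σ, not 2σ, is the factor for exactly 95%.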

Some of the issues in this paper come from Frank's attempts to calculate the uncertainty of mean values, given the σ values for the parts of that mean. For instance, to calculate the average temperature for a day, scientists simply average the day's maximum and minimum temperatures.

Tavg = (Tmax + Tmin)/2 or

Tavg = (1/2)*Tmax + (1/2)*Tmin

So the question is, how do you calculate the uncertainty for Tavg given values of σ for Tmax and Tmin? To assess the uncertainty for this average, we can use the following formula, which assumes the errors in the two terms are independent:

σf^2 = a^2*σa^2 + b^2*σb^2, where

σf is the 1σ uncertainty of the average

a = weight (fraction of the average) of the first term

σa = standard deviation of the first term

b = weight (fraction of the average) of the second term

σb = standard deviation of the second term
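Here is a short Python sketch of this formula, written so it also works for more than two independent terms. The function name `propagate` and the 0.5 °C input uncertainties are hypothetical, chosen only to show the arithmetic; like the formula, it assumes independent errors, so there is no covariance term.

```python
import math

def propagate(weights, sigmas):
    """1σ uncertainty of sum(w_i * x_i), assuming the errors in the x_i are independent.

    Implements σf^2 = a^2*σa^2 + b^2*σb^2 + ... with no covariance terms.
    """
    variance = sum((w * s) ** 2 for w, s in zip(weights, sigmas))
    return math.sqrt(variance)  # square root of the variance gives the 1σ value

# Hypothetical inputs: Tmax and Tmin each with a 1σ uncertainty of 0.5 °C,
# and weights a = b = 1/2 as in Tavg = (1/2)*Tmax + (1/2)*Tmin.
sigma_avg = propagate([0.5, 0.5], [0.5, 0.5])
print(f"1σ of Tavg:  {sigma_avg:.3f} °C")         # 0.354 °C
print(f"95% of Tavg: {1.96 * sigma_avg:.3f} °C")  # 0.693 °C
```

The result, 0.5/√2 ≈ 0.354 °C, is smaller than either input because independent errors in Tmax and Tmin partly offset each other.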

Since the left-hand side is σf squared (technically, the variance), we take the square root of the right-hand side to get the 1σ uncertainty. We can then multiply that value by 1.96 to get the 95% uncertainty. If there are more than two terms in the average, the formula extends as follows:

σf^2 = a1^2*σ1^2 + a2^2*σ2^2 + ... + aN^2*σN^2

Now what happens if, say, you want to calculate the uncertainty of an average where every part has the same uncertainty (σ1 = σ2 = ... = σN = σ) and carries an equal weight (a1 = a2 = ... = aN = 1/N)? In this case you can simplify the formula substantially to

σf^2 = σ^2/N

where N is the number of terms. This works because you can factor out the common σ^2, leaving N coefficients of (1/N)^2, which add up to N/N^2 = 1/N. Taking the square root gives σf = σ/sqrt(N), the standard error of the mean (SEM), where N is the number of samples. That is what we really want to calculate: not simply the ~2σ uncertainty of the individual values, but the uncertainty of their mean. As we'll see, this paper doesn't get that far and ignores 1/sqrt(N) altogether.
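To see the σ/sqrt(N) behaviour under the formula's own assumptions, here is a quick Monte Carlo sketch in Python: draw N independent, normally distributed errors, average them, and repeat many times. The σ = 0.5 value, the trial count, and the seed are arbitrary choices for illustration. It demonstrates the math for independent random errors; it does not by itself show whether a particular set of measurement errors satisfies those assumptions.

```python
import random
import statistics

def spread_of_means(sigma, n, trials=20_000, seed=1):
    """Empirical standard deviation of the mean of n independent N(0, sigma) draws.

    Assumes purely random, independent, normally distributed errors.
    """
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n))
        for _ in range(trials)
    ]
    return statistics.stdev(means)

SIGMA = 0.5  # hypothetical 1σ of a single reading
for n in (1, 4, 25):
    predicted = SIGMA / n ** 0.5
    simulated = spread_of_means(SIGMA, n)
    print(f"N={n:2d}: predicted σ/sqrt(N) = {predicted:.3f}, simulated = {simulated:.3f}")
```

The simulated spread of the means tracks σ/sqrt(N) closely, shrinking from about 0.5 to about 0.1 as N goes from 1 to 25.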

The "Misprint" in Frank's Paper

When I was looking at Frank's paper, equation (6) struck me as odd. Look carefully at how it's written.

It turns out that others on Skeptical Science have noticed this too, and there's much more to be said about it. From the Skeptical Science summary, I think we can detect where Frank's error began: equation (4), where Frank wrote the formula incorrectly (making the same mistake he made in equation 6) but still got the right answer.

The solution to this equation is ±0.541°C, so why did he say it's ±0.382°C? Notice he's calculating the ~2σ uncertainty for Tavg based on the uncertainties for the Tmax and Tmin values, but he didn't square the a and b terms, both of which are 1/2 (since Tmax and Tmin each make up half of the average). The variance equation should be σ^2 = (1/2)^2*(0.366^2) + (1/2)^2*(0.135^2), which simplifies to (0.366^2 + 0.135^2)/4; if you take the square root of that and multiply by 1.96, you get ±0.382°C. Frank published a comment on his paper pointing out that the 2 should have been outside the radical, and calls it a misprint. Sure enough, with the 2 outside the radical he'd get the right answer, but given the logic of what he's calculating, it would be better to write it as a 4 inside the radical.
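The arithmetic for equation (4) is easy to reproduce. This Python sketch plugs in the 0.366 and 0.135 values quoted above, treating them (as the variance equation above does) as 1σ values, and compares a 2 inside the radical, a 4 inside the radical, and a 2 outside it:

```python
import math

SIGMA_TMAX = 0.366  # value quoted above for equation (4)
SIGMA_TMIN = 0.135  # value quoted above for equation (4)

sum_sq = SIGMA_TMAX ** 2 + SIGMA_TMIN ** 2

as_printed = 1.96 * math.sqrt(sum_sq / 2)   # 2 inside the radical, as printed
corrected = 1.96 * math.sqrt(sum_sq / 4)    # (1/2)^2 weights: 4 inside the radical
two_outside = 1.96 * math.sqrt(sum_sq) / 2  # 2 moved outside the radical

print(f"{as_printed:.3f}")   # 0.541
print(f"{corrected:.3f}")    # 0.382
print(f"{two_outside:.3f}")  # 0.382
```

Moving the 2 outside the radical and putting a 4 inside it are algebraically identical, since sqrt(x)/2 = sqrt(x/4); only the printed version gives 0.541.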

And it's this misprint (error?) that explains Frank's mistake in equation 6. The 12 in the denominator should have been 12^2. That is, inside the radical the expression should be (12/12^2)*(0.198^2), which simplifies to (0.198^2)/12. In equation 4, he printed the misprint but reported the correct result because he didn't calculate it from the equation he wrote. In equation 6, he calculated an incorrect result from the same "misprint" in the equation. And he did the same thing in equation 5.
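The same check for equation (6), using the twelve equal terms of 0.198 described above. This Python sketch only shows the numeric consequence of each denominator, 12 versus 12^2:

```python
import math

SIGMA = 0.198  # value quoted above for equation (6)
N = 12         # number of equal terms

as_printed = 1.96 * math.sqrt(N * SIGMA ** 2 / N)      # 12 in the denominator
corrected = 1.96 * math.sqrt(N * SIGMA ** 2 / N ** 2)  # 12^2 in the denominator

print(f"{as_printed:.3f}")  # 0.388
print(f"{corrected:.3f}")   # 0.112
```

With 12 in the denominator the N terms cancel, and the result is just 1.96 × 0.198; with 12^2 it becomes 1.96 × 0.198/sqrt(12).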

SEM and 1/sqrt(N)

Correlated and non-normal systematic errors violate the assumptions of the central limit theorem, and disallow the statistical reduction of systematic measurement error as 1/√N.

But anomalies involve subtracting one number from another, not adding the two together. Remember: when subtracting, you subtract the covariance term. For f = a*A - b*B, it's σf^2 = a^2*σa^2 + b^2*σb^2 - 2ab*σab, where σab is the covariance between A and B. If a positive covariance increases the uncertainty of a sum, the same covariance decreases the uncertainty of a difference. That is one of the reasons that anomalies are used to begin with!
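A Python sketch of the covariance term with weights a = b = 1. The error model, a shared offset with σ = 0.4 plus independent noise with σ = 0.3 on each reading, is hypothetical and chosen only to create a positive covariance; the simulation compares the formula against the spread of simulated sums and differences.

```python
import math
import random
import statistics

def combined_sigma(sigma_a, sigma_b, cov, subtract):
    """1σ of A + B or A - B (weights of 1), including the covariance term."""
    sign = -1.0 if subtract else 1.0
    return math.sqrt(sigma_a ** 2 + sigma_b ** 2 + sign * 2.0 * cov)

# Hypothetical error model: a shared offset plus independent noise per reading.
SHARED, NOISE = 0.4, 0.3
sigma = math.sqrt(SHARED ** 2 + NOISE ** 2)  # 1σ of each reading (0.5)
cov = SHARED ** 2                            # covariance created by the shared offset

rng = random.Random(42)
diffs, sums = [], []
for _ in range(50_000):
    offset = rng.gauss(0.0, SHARED)
    reading_a = offset + rng.gauss(0.0, NOISE)
    reading_b = offset + rng.gauss(0.0, NOISE)
    diffs.append(reading_a - reading_b)  # the shared offset cancels
    sums.append(reading_a + reading_b)   # the shared offset doubles

print(f"A - B: formula {combined_sigma(sigma, sigma, cov, True):.3f}, "
      f"simulated {statistics.stdev(diffs):.3f}")
print(f"A + B: formula {combined_sigma(sigma, sigma, cov, False):.3f}, "
      f"simulated {statistics.stdev(sums):.3f}")
```

The formula gives about 0.424 for the difference and about 0.906 for the sum, and the simulated spreads land close to both: the same covariance that inflates the sum shrinks the difference.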

Typo in RHS of eqn below (7): 219 --> .219

Better fix it before Frank catches it!! :-)

Ha! Fixed. Thanks; that was a close one :-).

"σf^2 = a^2*σ^2a + b^2σ^2b" is the wrong equation. You're treating variances as though they are measurements.

Eqn. 4 expresses the mean of two variances, V_u = (V_u1+V_u2)/2. It is not the sum of two measurements.

The rest of your analysis also merely repeats the SkS train wreck. I've already worked through it. It's misconceived and wrong throughout. But SkS disallows debate, making them intellectual cowards. You may be different. But if you encourage an actual debate, I can tell you right now that the posted analysis will go down in flames.

It's also clear you got your stuff from SkS, Scott. So, it's not that they noticed it too. It's that you got your analysis from them, and didn't acknowledge the source.

σf^2 = a^2*σ^2a + b^2σ^2b is the correct equation for 4-7, and your answer for 4 agrees with it, since you accidentally got the right answer with that equation, but you got it wrong for 5-7. All you did in equations 5-6 is convert 1σ uncertainty to 95% uncertainty. Your paper is wrong. After finding your mistake, I did more research and found the SkS article that had also found it; it turns out lots of people have pointed this out to you. I agree there's nothing special about my post; it's completely obvious, and lots of people have noticed it. You're also wrong to remove /sqrt(N) from your calculations.

Also note that this is NOT the equation you used in equation (4): "V_u = (V_u1+V_u2)/2." Notice that if you take the square root of this, you'd have a 2 inside the radical, but you admitted that was a "misprint." The equation you actually solved was V_u = (V_u1+V_u2)/4, which is identical to the equation I gave you, and that's the equation that gave you the right answer, not the one you wrote in the text.

Eqn. 4 is a mean of variances. Eqns. 5&6 are RMS. They are not the same.

The correct answer from eqn. 4 was gotten because I did the calculation correctly, not by accident. The division by 2 *must* be outside the square root in order to retain the relative magnitude of the uncertainty to the resulting mean. You apparently don't know that.

The equation I "actually solved" was, sqrt(V_u₁²+V_u₂²)/2. The sqrt butts up against the parenthesis and operates first, division by 2, second. First year algebra.

About Eqn. 7: You are invited to consult p. 3475 of Rohde & Hausfather The Berkeley Earth Land/Ocean Temperature Record. Earth Syst. Sci. Data, 2020. 12(4), 3469-3479; doi: 10.5194/essd-12-3469-2020, where you will find:

"Uncertainties for the combined record are calculated by assuming the uncertainties in LAT and SST time series are independent and can be combined in proportion to the relative area of land and ocean."

Likewise, p. 2623-4 in Folland, et al., Global Temperature Change and its Uncertainties Since 1861. Geophys. Res. Lett., 2001. 28(13): p. 2621-2624; doi:10.1029/2001GL012877, has:

"We calculated separate RSOA [reduced space optimal averaging] uncertainties for the hemispheres, but used time series of the remaining global uncertainties weighted according to the land- and ocean-based data area fraction in each hemisphere."

You may come to realize that the two quoted descriptions of method are eqn. 7 in words.

Your arguments are ill-conceived, Scott.

Also, none of the uncertainties stem from random error. The assumptions of Gaussian statistics are violated. Applying 1/sqrt(N) is wrong.

After you corrected eqn 4, it was not, "V_u = (V_u1+V_u2)/2," as you said above. It was actually "V_u = (1/2)^2*(V_u1) + (1/2)^2*(V_u2)," which agrees with the equation I wrote above and you said was wrong. It simplifies to V_u = (V_u1+V_u2)/4. If you take the sqrt of it, you would get "sqrt(V_u1 + V_u2)/2" as I pointed out above (again you got it wrong). You accidentally got the answer right here by solving the equation you wrote incorrectly. Then you fixed a "misprint" to get the answer to work out. What I wrote above was exactly correct, and then you got Eqn 4 wrong AGAIN in your first comment here.

The equation you should have used in Eqns 5&6 is what I derived for you so you could correct your paper. If you doubt me check Bevington's book on statistics, page 58. The equation is 4.23, and I got it right. What you did simplifies to be a trivial σf = σ. You didn't actually calculate anything except to convert 1σ to 95% uncertainty.

On Eqn 7, your quotes don't show you to be correct. Of course they are combined relative to the portions of land vs SST area, but the terms should be squared with the variance terms, just like you squared (1/2)^2 in eqn 4.

This is objectively false: "none of the uncertainties stem from random error." All measurements include random error, and biases can be quantified and therefore corrected. You would have to imagine that the instrumental record not only contains biases, but also that they happen to be INCREASING through time and skewed toward producing a warming trend. But globally, bias correction reduces the global warming trend.

"It was actually "V_u = (1/2)^2*(V_u1) + (1/2)^2*(V_u2)," Dyslexia. Solving eqn. 4 the way it is written yields 0.541. One can't "accidentally" get the right answer from the wrong equation.

Eqns. 5&6 are RMS. Agreed they're trivial, but are necessarily there for completeness.

Bevington eqn. 4.23 is the reduction of uncertainty when averaging measurements with random iid error. Virtually all of Bevington's analyses and equations are strictly valid only for random error.


However, none of the errors considered in LiG Met. are random. They're systematic with non-normal distributions. The statistical assumptions of 4.23 are violated, and it cannot be used.

Bevington 4.22 (which will likely be your fallback) is the equation for the uncertainty in a mean. But I used eqns. 5&6 because the analysis required the mean of the uncertainty. They are not the same.

"All measurements include random error, ..." Equation 4 is dealing with the inherent instrumental uncertainty of a 1C/division thermometer. Not with measurements. Those particular uncertainties have no random component. If you'd read the paper, you'd know that.

You're criticizing the paper without having studied it, aren't you, Scott.

"...biases can be quantified and therefore corrected." Only when one knows them. Which they do not. If you'd read LiG Met. with any sort of care, you'd know that, too.

Systematic field measurement error from environmental variables is not random (demonstrated) and the error biases are not known because the measurement errors have not been determined. They've all just been hand-waved away.

You got eqn 4 wrong in the paper and then again in your first comment here. If you solve the wrong equation incorrectly, you could accidentally get the right answer. Eqns 5-6 are not RMS; you simply multiplied 1σ by 1.96. You literally just multiplied the σ value by n/n, then squared σ and then took the sqrt of σ^2. All completely unnecessary, and did nothing whatsoever. You don't have to be trivial to be complete. The equation you should have used was 4.23 in Bevington: σf^2 = (σ^2)/N; that's how you calculate the variance of the mean, and if you take the sqrt, you get the standard error of the mean, which is what you should have calculated. I honestly don't think you know the difference between random and systematic errors, and if these are non-normal distributions, you shouldn't be using the 68-95-99.7 rule anyway.

"If you'd read LiG Met. with any sort of care..." With all due respect, if you WROTE your paper with any sort of care, you wouldn't make these blunders. You wrote Eqn 4 wrong, then blamed it on a misprint, but then repeated the same wrong equation above. You wrote Eqn 6 wrong in exactly the same way, but solved it wrong too. The actual solution to the eqn 6 you wrote is 0.388, not 0.382, and fixing eqn 6 to what it should be reduces it to 0.112. It seems it's really hard for you to get any equation right. Above you wrote, "The equation I 'actually solved' was, sqrt(V_u₁²+V_u₂²)/2." Hardly. Do you really want me to believe you squared the variances, added them, then took the sqrt, then divided by 2? And you're telling me to read with care? Perhaps the problem is there are way too many people reading your paper with care, and that's why so many people are pointing out the same exact errors. So please don't lecture me about care; you've been pretty careless in your paper.

"The equation you should have used was 4.23 in Bevington: σf^2 = (σ^2)/N; that's how you calculate the variance of the mean"

For measurements with random errors *only*. In LiG Met., none of the errors I assess have a normal distribution. That's a demonstrated fact. Which you've ignored repeatedly.

"0.112." Wrong yet again. Eqns. 5&6 are root-mean-square. The division is by N, not N².

You're insistently wrong, Scott. You've implicitly admitted to not having read the paper. From your arguments you don't understand the logic of the analysis.

For anyone interested and reading here, the full refutation of Scott's nonsense, and of Bob Loblaw's and jg's at SkS, can be found here:

https://notrickszone.com/2023/08/24/dr-patrick-franks-refutation-of-a-sks-critique-attempt-loblaws-24-mistakes-exposed/

Compare for yourself.

All measurements have random errors, and if you're right that none of the errors you assess have a normal distribution, then you shouldn't be using the 68-95-99.7 rule, which would mean your paper has even more problems. Here's a very elegant derivation of the equation for the variance of the mean you should have used. Since you apparently can't be bothered to read Bevington carefully, maybe you'll watch it on Youtube. She begins a little before I do above, and she explains it with more details.

https://www.youtube.com/watch?v=XymFs3eLDpQ

I'd also encourage you to go back and read the sections you quoted from in the two papers you cited above. If you read those with care, you'll see that they did these calculations correctly.